Unlocking the Digital Age: How Claude Shannon's Communication Theory Still Rules

Hey there, fellow curious minds! Have you ever stopped to think about how we communicate in this wild, wonderful digital world of ours?

From that perfectly timed GIF you sent to your friend to the crystal-clear video call with your family across the globe, it all seems to just… work, right?

But behind that seamless experience lies a fascinating, almost magical, foundational idea.

Today, we're diving deep into a paper that, frankly, changed everything: "A Mathematical Theory of Communication" by Claude E. Shannon.

Trust me, even if math makes your eyes glaze over, this isn't some dusty old textbook read.

It's the blueprint for the information age, and understanding it is like peeking behind the curtain of modern technology.

Ready to unravel the secrets of how information truly flows? Let’s go!

---

Table of Contents

- The Big Bang of Information: Where It All Began

- So, What Exactly *Is* This Theory Anyway?

- Entropy: The Measurement of Surprise (and Information)

- Fighting the Static: Noise and Channel Capacity

- From Theory to Reality: Shannon's Enduring Legacy

- Why Shannon Still Matters in Our AI-Driven World

- Wrapping It Up: A Nod to the Master

---

The Big Bang of Information: Where It All Began

Imagine the world before 1948. Communication was, well, a bit messier.

Engineers were doing incredible things with telegraphs and telephones, but they were largely working on intuition, patching things up as they went along.

There wasn't a unified framework, a grand theory, for understanding how information itself behaved.

Enter Claude Shannon, a brilliant mind working at Bell Labs.

He wasn't content with just making things work; he wanted to understand *why* they worked, and how they could work *better*.

He saw communication not just as transmitting signals, but as sending "information," a concept that, surprisingly, hadn't been rigorously defined.

In 1948, he dropped "A Mathematical Theory of Communication" like a mic on the world stage.

It was published in the Bell System Technical Journal and, let me tell you, it was revolutionary.

Suddenly, information wasn't some fuzzy concept; it was a quantifiable entity.

It was something you could measure, manipulate, and even protect from the chaos of the real world.

Think of it like this: before Newton, people knew apples fell from trees, but he gave us the *laws* of gravity.

Shannon did that for information.

He gave us the underlying principles, the fundamental laws, of how information is created, transmitted, and received.

---

So, What Exactly *Is* This Theory Anyway?

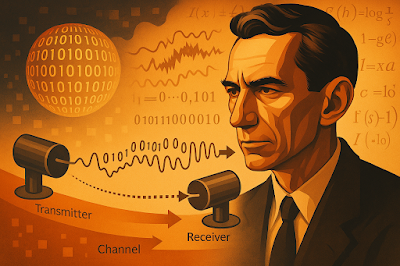

At its core, Shannon’s theory provides a **mathematical model of communication**.

He broke down the entire process into several key components, which, once you see them, seem incredibly intuitive, almost obvious.

But no one had formalized it quite like he did.

Imagine a sender (like me, writing this) trying to convey a message (this very blog post) to a receiver (your brain!).

Shannon's model looks something like this:

- Information Source: This is where the message originates. It could be your voice, a picture, a text document – anything that holds information.

- Transmitter: This component encodes the message into a signal suitable for transmission. Think of your phone converting your voice into electrical signals.

- Channel: This is the medium through which the signal travels. It could be a copper wire, fiber optic cable, or even the airwaves for Wi-Fi.

- Noise Source: Ah, the villain of our story! Noise is anything that interferes with the signal and distorts the message. Static on a radio, interference on a phone call, or even just a noisy environment.

- Receiver: This takes the incoming signal, which noise may have corrupted along the way, and tries to decode it back into the original message.

- Destination: The final recipient of the message.
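To make the components above a bit more concrete, here is a minimal sketch of the whole chain in Python. It is a toy under stated assumptions, not how any real phone or modem works: the function names are made up for illustration, and the "channel" is a simple model that flips each bit independently with a fixed probability.

```python
import random

def transmitter(message: str) -> list[int]:
    """Encode text as a flat list of bits (8 bits per UTF-8 byte)."""
    return [(byte >> i) & 1
            for byte in message.encode("utf-8")
            for i in range(7, -1, -1)]

def channel(bits: list[int], flip_prob: float, rng: random.Random) -> list[int]:
    """Toy noisy channel: each bit is flipped independently with flip_prob."""
    return [bit ^ (rng.random() < flip_prob) for bit in bits]

def receiver(bits: list[int]) -> str:
    """Decode bits back into text, replacing bytes that no longer decode."""
    data = bytes(
        int("".join(map(str, bits[i:i + 8])), 2)
        for i in range(0, len(bits), 8)
    )
    return data.decode("utf-8", errors="replace")

rng = random.Random(42)                    # seeded so runs are repeatable
message = "Hello, Shannon!"                # information source
signal = transmitter(message)              # transmitter
noisy = channel(signal, 0.02, rng)         # channel + noise source
print(receiver(noisy))                     # receiver -> destination (may be garbled)
print(receiver(channel(signal, 0.0, rng))) # no noise: the message survives intact
```

With the flip probability set to zero the message comes back unchanged; raise it and characters start to get mangled, which is exactly the problem the rest of the theory tackles.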

Sounds simple, right? The genius is in how he quantified the *information* and the *impact of noise*.

He wanted to know: how much information can we reliably send through a noisy channel?

And that, my friends, led to some truly mind-bending concepts.

---

Entropy: The Measurement of Surprise (and Information)

This is where things get really cool, and maybe a little counter-intuitive at first.

When Shannon talked about "information," he wasn't talking about meaning or semantics.

He didn't care if your message was a love poem or a grocery list.

What he cared about was **unpredictability** or **surprise**.

The more unexpected a message is, the more "information" it contains.

Think about it: if I tell you "the sun will rise tomorrow," that's not much information, is it?

You already know that! It's highly predictable.

But if I tell you "I just won the lottery and I'm buying everyone a spaceship," now THAT'S information!

It's highly improbable and therefore, highly informative.
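If you'd like to put a number on that surprise, the standard measure is the negative base-2 logarithm of the message's probability. Here is a small sketch; the probabilities below are invented purely for illustration, not real odds.

```python
import math

def surprisal_bits(probability: float) -> float:
    """Information content, in bits, of an event with the given probability."""
    return -math.log2(probability)

# Hypothetical probabilities, chosen only to illustrate the idea.
print(surprisal_bits(0.999999))         # "the sun will rise": almost 0 bits
print(surprisal_bits(0.5))              # a fair coin flip: exactly 1 bit
print(surprisal_bits(1 / 300_000_000))  # a lottery win: roughly 28 bits
```

The less likely the event, the bigger the number, which matches the intuition of the sunrise versus the lottery.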

Shannon called the *average* surprise of a source its **entropy**, borrowing a term from physics.

In thermodynamics, entropy often refers to disorder, but in information theory, it’s a measure of the uncertainty or randomness of a source.

The higher the entropy, the more information each message from that source conveys on average.

This concept allows us to measure information in bits (binary digits).

A single bit can represent two equally likely outcomes, like a coin flip (heads or tails).

If you have more possible outcomes, you need more bits to represent the information.
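Here is a short sketch of how that measurement works in practice, using the usual formula for entropy: the sum of p times log2(1/p) over all outcomes. The function name and example distributions are just illustrative choices.

```python
import math

def entropy_bits(probabilities: list[float]) -> float:
    """Shannon entropy in bits: the average surprise over all outcomes."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

fair_coin = [0.5, 0.5]
biased_coin = [0.9, 0.1]
fair_die = [1 / 6] * 6

print(entropy_bits(fair_coin))    # 1.0 bit per flip
print(entropy_bits(biased_coin))  # about 0.47 bits: more predictable, less information
print(entropy_bits(fair_die))     # about 2.58 bits: more outcomes, more bits
```

Notice that the biased coin carries less than one bit per flip. That gap between "one symbol" and "less than one bit of real information" is the room that compression exploits.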

This idea became the bedrock for everything from data compression (think about how your photos shrink in file size) to error correction codes (which ensure your emails arrive intact).

It’s about squeezing the most "surprise" out of every signal.

---

Fighting the Static: Noise and Channel Capacity

Okay, so we have information, and we can measure it.

But what about the pesky problem of noise?

Life, as we know, isn't perfect, and neither are communication channels.

Noise is the arch-nemesis of clear communication.

It's the fuzz on your radio, the crackle on your phone line, the corrupted pixels on a low-bandwidth video stream.

Shannon didn't just acknowledge noise; he quantified its impact.

And here's where his most mind-blowing achievement comes in: **the Channel Capacity Theorem**.

This theorem, formally the noisy-channel coding theorem and often loosely called Shannon's Law, states that for any given communication channel with a certain amount of noise, there's a **maximum rate at which information can be transmitted reliably**, meaning with a probability of error as small as you like.

This maximum rate is called the **channel capacity**, and it’s typically measured in bits per second (bps).

Think of it like a highway. There's a maximum speed limit (channel capacity) that cars (information) can travel safely (reliably) without crashing (errors), given the condition of the road (noise).

What's truly incredible is that Shannon proved that you *can* get as close as you like to this maximum capacity, even in the presence of noise, by using clever coding schemes.

It’s like saying, "Yes, there's static, but we can design our signals so smart that errors become as rare as we want, as long as we don't try to send faster than the channel's capacity."
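For one especially common kind of channel, a fixed band of frequencies disturbed by random (Gaussian) noise, the capacity has a famously compact formula known as the Shannon-Hartley theorem: C = B · log2(1 + S/N), where B is the bandwidth in hertz and S/N is the signal-to-noise power ratio. Here is a small sketch; the bandwidth and noise figures are hypothetical round numbers, not measurements of any real line.

```python
import math

def capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley capacity in bits per second for a Gaussian-noise channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert decibels to a plain power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)

# Hypothetical example: a 3 kHz voice-grade line with a 30 dB signal-to-noise ratio.
print(f"{capacity_bps(3_000, 30):,.0f} bps")  # roughly 30,000 bps

# Doubling the bandwidth doubles capacity; a better SNR helps only logarithmically.
print(f"{capacity_bps(6_000, 30):,.0f} bps")
print(f"{capacity_bps(3_000, 40):,.0f} bps")
```

The formula gives the speed limit, not the car: it says nothing about which coding scheme gets you there, only that no scheme can do better.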

This wasn't just theoretical musing; it was a direct challenge to engineers.

It set a clear, quantifiable goal for how good their communication systems *could* be.

No more guesswork! We had a target.

---

From Theory to Reality: Shannon's Enduring Legacy

If you're using a smartphone, streaming a movie, or sending an email right now, you are living in Shannon's world.

His theory isn't just an academic curiosity; it's the invisible scaffolding of the digital age.

Let's look at some tangible impacts:

Data Compression:

Remember that concept of entropy and measuring surprise? This directly led to algorithms that remove redundancy from data.

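When you zip a file, or compress a JPEG image or an MP3 song, you're leveraging Shannon's insights.

Lossless formats like ZIP and PNG take out only the "un-surprising", redundant bits, so the original can be rebuilt exactly. Lossy formats like JPEG and MP3 go a step further and also throw away detail your eyes and ears are unlikely to miss.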

This is why you can store thousands of photos on your phone or stream high-definition video over the internet without constantly running out of space or bandwidth.
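You can watch the link between predictability and compression with a few lines of Python. This sketch uses the standard library's `zlib` module (a lossless compressor, so unlike JPEG or MP3 nothing is thrown away) on two made-up inputs of the same size: one highly repetitive, one pseudo-random.

```python
import random
import zlib

n = 10_000

# Highly predictable data: the same two bytes over and over (low entropy).
predictable = b"ab" * (n // 2)

# Unpredictable data: pseudo-random bytes from a seeded generator (high entropy).
rng = random.Random(0)
unpredictable = bytes(rng.getrandbits(8) for _ in range(n))

print(len(zlib.compress(predictable)))    # a tiny fraction of the original size
print(len(zlib.compress(unpredictable)))  # about the original size: nothing to squeeze

# Lossless means the round trip gives back exactly what went in.
assert zlib.decompress(zlib.compress(predictable)) == predictable
```

The repetitive input shrinks dramatically, while the random one barely budges. With no redundancy to remove, there is nothing left for the compressor to exploit.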

Error Correction Codes:

How does your email arrive perfectly, even if it traveled across continents and through dozens of servers?

Shannon's work on channel capacity inspired the development of sophisticated error correction codes.

These codes add a little bit of "redundancy" (the opposite of compression) to the message in a smart way, so that if some parts get corrupted by noise, the receiver can still reconstruct the original message.

Think of it like a checksum on a package, only smarter: a plain checksum can tell you that something went wrong, while an error correction code carries enough extra information to pinpoint the damage and fix it.

Without these, every digital interaction would be a frustrating mess of garbled data.
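To see how "smart redundancy" can actually repair damage, here is a sketch of one classic textbook scheme, the Hamming(7,4) code, which turns 4 data bits into 7 and can fix any single flipped bit. It is chosen here only as a small illustration; real systems typically use much more powerful codes, and the function names are made up for this example.

```python
def hamming74_encode(data: list[int]) -> list[int]:
    """Encode 4 data bits as a 7-bit codeword with 3 parity bits."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # checks positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # checks positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # checks positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(received: list[int]) -> list[int]:
    """Correct up to one flipped bit, then return the 4 data bits."""
    c = list(received)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s3  # 0 means no error detected
    if error_position:
        c[error_position - 1] ^= 1         # flip the damaged bit back
    return [c[2], c[4], c[5], c[6]]

data = [1, 0, 1, 1]
codeword = hamming74_encode(data)
corrupted = list(codeword)
corrupted[4] ^= 1                          # noise flips one bit in transit
print(hamming74_decode(corrupted) == data) # True: the error was repaired
```

The three parity checks together spell out the position of the flipped bit in binary, which is why the receiver can fix the error rather than merely notice it. Two flipped bits in the same codeword would fool this particular code, which is one reason stronger codes exist.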

Digital Communication Systems:

From Wi-Fi and cellular networks to satellite communication and deep-space probes, every modern communication system is designed with Shannon's principles in mind.

Engineers use his formulas to determine how fast data can be sent, how much power is needed, and how robust the system needs to be to overcome noise.

His work provided the theoretical limits, guiding innovation and pushing the boundaries of what was thought possible.

---

Why Shannon Still Matters in Our AI-Driven World

You might be thinking, "That's all great, but it's 2025! We have AI and quantum computing. Is this stuff still relevant?"

Absolutely, my friend. More than ever!

In fact, Shannon's theory is undergoing a fascinating resurgence, finding new applications and deeper interpretations in the age of big data and artificial intelligence.

Machine Learning and Neural Networks:

Concepts like information gain and entropy are fundamental in decision trees and other machine learning algorithms.

When an AI model is learning, it's essentially trying to reduce its "uncertainty" about the right answer for a given input; many classifiers are trained by minimizing cross-entropy, a measure that comes straight out of Shannon's framework.

Understanding how much information a particular feature provides is crucial for building efficient and effective AI models.
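Here is a minimal sketch of information gain, the quantity a decision tree can use to decide which feature to split on: the entropy of the labels before the split minus the weighted entropy after it. The tiny dataset and the feature names are invented for illustration.

```python
import math
from collections import Counter

def entropy_bits(labels: list[str]) -> float:
    """Shannon entropy, in bits, of the label distribution."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total)
                for n in Counter(labels).values())

def information_gain(labels: list[str], feature_values: list[str]) -> float:
    """Drop in label entropy after grouping examples by a feature's value."""
    total = len(labels)
    groups: dict[str, list[str]] = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    remaining = sum(len(g) / total * entropy_bits(g) for g in groups.values())
    return entropy_bits(labels) - remaining

# Hypothetical toy data: did someone play tennis on four different days?
played = ["yes", "yes", "no", "no"]
outlook = ["sunny", "sunny", "rainy", "rainy"]  # perfectly predicts the label
weekday = ["mon", "tue", "mon", "tue"]          # tells us nothing

print(information_gain(played, outlook))  # 1.0 bit: all uncertainty removed
print(information_gain(played, weekday))  # 0.0 bits: no help at all
```

A tree-building algorithm using this criterion would split on `outlook` first, because it removes the most uncertainty about the answer.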

Data Science and Big Data:

Managing and transmitting massive amounts of data is a daily challenge.

Shannon's principles guide how we compress, store, and transmit this data efficiently, ensuring its integrity and accessibility.

Without these foundational ideas, our data centers would be bursting at the seams, and our internet speeds would crawl to a halt.

Quantum Information Theory:

Even in the cutting-edge field of quantum computing and quantum communication, Shannon's classical information theory serves as a crucial reference point.

Researchers are exploring how his concepts can be extended or adapted to the strange and wonderful world of quantum mechanics, paving the way for truly secure and powerful communication methods.

So, whether you're chatting with a chatbot, streaming a movie in 8K, or watching a self-driving car navigate traffic, remember that behind the scenes, Shannon's elegant mathematical framework is tirelessly at work, ensuring that the information gets where it needs to go, reliably and efficiently.

---

Wrapping It Up: A Nod to the Master

It's truly remarkable how one paper, penned by a brilliant mathematician over 75 years ago, continues to shape our technological landscape.

Claude Shannon didn't just build a theory; he laid the groundwork for an entire scientific discipline: **information theory**.

He showed us that information isn't just data; it's a fundamental quantity that can be measured, manipulated, and understood with mathematical precision.

His insights freed us from the limitations of analog communication and ushered in the digital revolution, making our interconnected world possible.

So, the next time you send a text, stream a video, or marvel at the speed of the internet, take a moment to appreciate the genius of Claude Shannon.

He truly was the unsung hero who taught us how to talk to machines, and how machines could talk to each other, with clarity and confidence, even in a noisy world.

It’s a powerful reminder that even the most abstract mathematical ideas can have the most profound, practical impact on our daily lives.

And that, I think, is pretty darn cool.

